double-descent

We investigate double descent more deeply and try to precisely characterize the phenomenon under different settings. Specifically, we focus on the impact of label noise and regularization on double descent. None of the existing works consider these aspects in detail and we hypothesize that these play an integral role in double descent.

Understanding Double Descent

We pose a particular question: can we mitigate double descent by applying adequate regularization in the case of noisy data? Further, we also plan to investigate if suitable regularization can bias the trajectory of gradient-based optimization algorithms in such a way that double descent can be mitigated, i.e. we do not observe an intermediate dip at all or observe a very small intermediate dip in the test performance.

Background

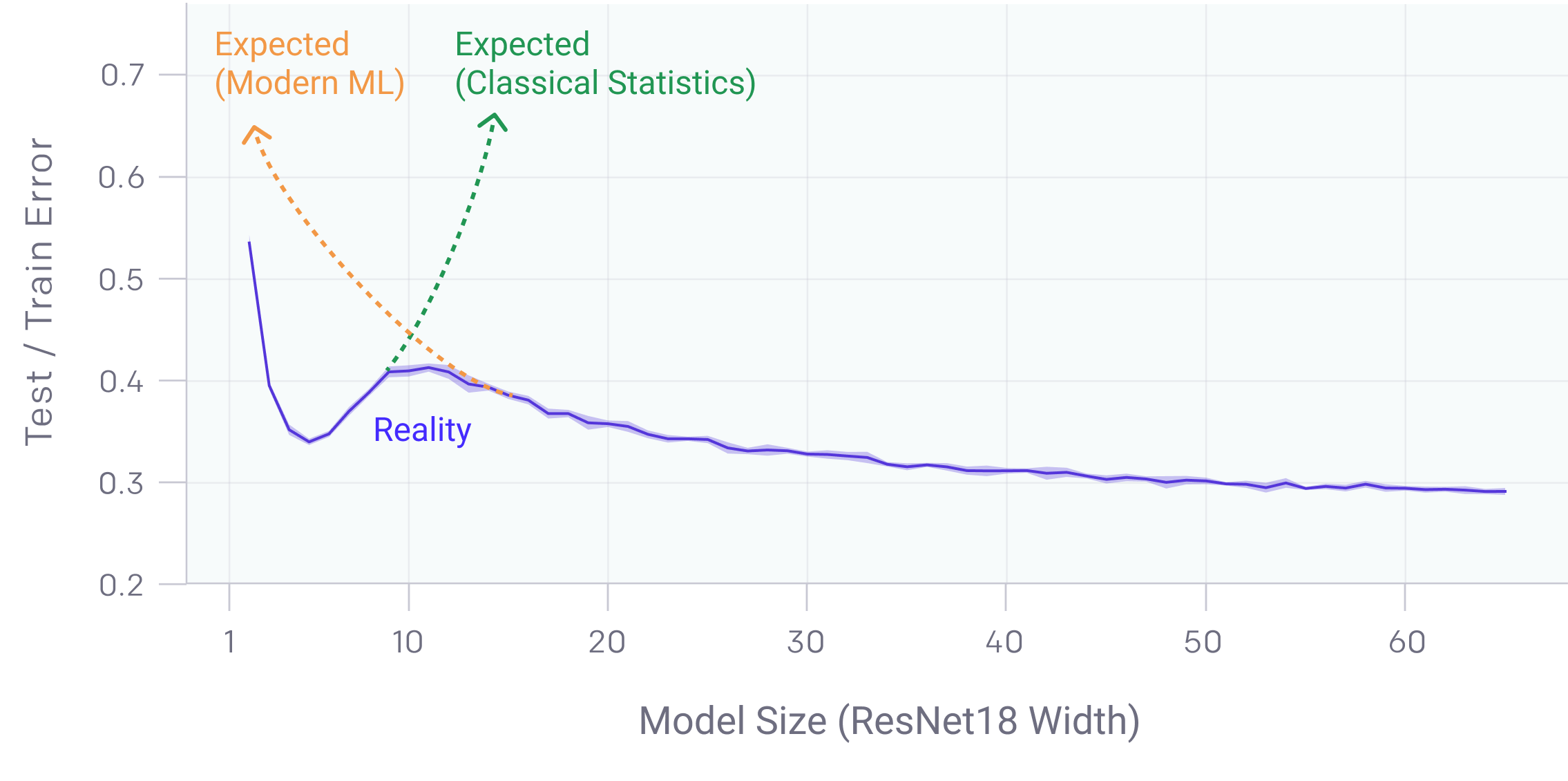

We focus on the phenomenon of double descent in deep learning wherein when we increase model size or

the num-ber of epochs, performance on the test set initially improves(as expected),

then worsens but again starts to improve andfinally saturates, which is against conventional wisdom.

This phenomenon has already been demonstrated on traditional machine

learning models [Belikin et.al.],

[Belkin et.al.] but more recently was also observed in complex deep learning models

[Nakkiran et.al.].

There have also been attempts at mathematically explaining

double descent for simple linear regression settings.

We focus on the phenomenon of double descent in deep learning wherein when we increase model size or

the num-ber of epochs, performance on the test set initially improves(as expected),

then worsens but again starts to improve andfinally saturates, which is against conventional wisdom.

This phenomenon has already been demonstrated on traditional machine

learning models [Belikin et.al.],

[Belkin et.al.] but more recently was also observed in complex deep learning models

[Nakkiran et.al.].

There have also been attempts at mathematically explaining

double descent for simple linear regression settings.

DataSets

We shall try to reproduce the limited existing results from OpenAI [Nakkiran et.al.] which use commonly used datasets such as CIFAR-10, CIFAR-100, etc. on common architectures such as ResNets, VGG, etc. We shall also try to observe this in simple problems such as linear regression. More importantly, we shall try to observe its variation with different kinds and degrees of regularization as well as different noise levels. Existing works do not consider regularization in too much detail but we think it is a critical factor controlling the extent of double descent.